The grid is built for the peak

And that actually gives us slack to build into

Imagine you are appointed to run an ice cream company, complete with factory and delivery trucks. Now, imagine that this ice cream is so good–so vital to the people of your community–that the last time you ran out on a hot summer day, people freaked out and elected Mr. Freeze as governor. It is very good ice cream.

How would you avoid ever running out?

Well, you would build extra capacity. People mostly want frozen desserts in summer, so you could overbuild your factory to prepare for the busy season. You could buy extra delivery trucks, custom-made for efficient trips to neighborhoods and businesses. You could try to build some freezers to stockpile, but you worry the craving for sweet treats is too great for storage to do much good.

Worse: all of this is starting to get pricey. People will complain if their dessert is too expensive, something about you ‘ruining their childhood’.

The cherry on top: there are new people moving to town. You’re not sure how much ice cream they’ll want, or even where they’re going to live and how many more trucks you’ll need. It’s nearly impossible to make everyone happy.

—

As crazy as it sounds, this is the position utilities and grid operators find themselves in.

The whole electric system is built to serve peak demand. Billions of dollars are deployed to keep the lights on and the A/C humming during the hottest minutes and hours of the year.

This is harder because electricity is more complicated than ice cream[citation needed]. Electricity is expensive to store, and generation needs to be finely balanced¹ with fluctuating loads (uses of power, from phone chargers to foundries). If this balance gets too far out of whack, even for a short time, the whole system can collapse².

This can happen in an uncontrolled way, but more commonly a grid operator will take parts of the system offline in a rolling blackout³ to save the rest. This happened repeatedly in California in the early 2000s. People were so angry about this failure to balance generation and load that they recalled the governor and elected Arnold Schwarzenegger.

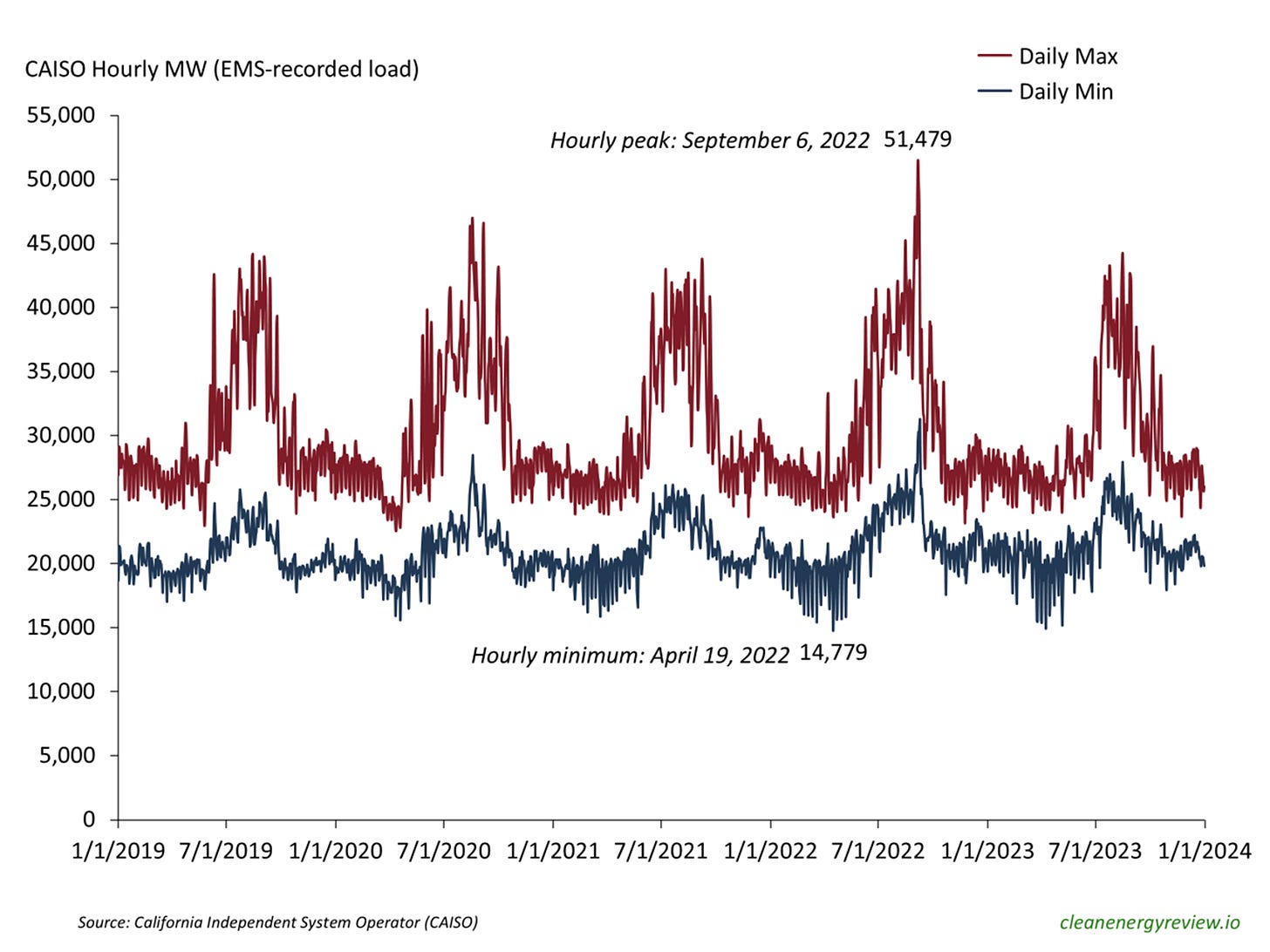

The upshot is that the system is actually pretty under-utilized by design, with spare capacity more than 99.99% of the time. Over the last 5 years, California’s average load has been just ~50% of its peak, set at 4:57pm on September 6, 2022.

This fat right tail (in green) is what everyone is planning for, and why there is so much ‘extra’ capacity.
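To put rough numbers on “under-utilized by design,” here is a sketch of two metrics you could compute from a year of hourly load: the load factor (average load divided by peak load) and the share of hours that come within a few percent of the peak. The load series below is synthetic, built from made-up shapes for a daily cycle, a summer bump, and a three-day September heat wave; it is not CAISO data, and the function names are hypothetical.

```python
import math

def load_factor(loads_mw):
    """Average load divided by peak load (1.0 would be a perfectly flat load)."""
    return sum(loads_mw) / len(loads_mw) / max(loads_mw)

def share_of_hours_near_peak(loads_mw, within=0.05):
    """Fraction of hours whose load is within `within` (e.g. 5%) of the peak."""
    threshold = (1 - within) * max(loads_mw)
    return sum(1 for load in loads_mw if load >= threshold) / len(loads_mw)

# Synthetic year of hourly load in MW (illustrative only, not real data):
# a daily cycle peaking mid-afternoon, a summer bump, and a short heat wave.
HOURS = 8760
loads = []
for h in range(HOURS):
    day, hour = divmod(h, 24)
    daily = 0.15 * math.sin(2 * math.pi * (hour - 10) / 24)
    seasonal = 0.10 * math.sin(2 * math.pi * (day - 100) / 365)
    heat_wave = 0.35 if 248 <= day <= 250 and 15 <= hour <= 19 else 0.0
    loads.append(25_000 * (1 + daily + seasonal + heat_wave))

print(f"load factor: {load_factor(loads):.0%}")
print(f"hours within 5% of peak: {share_of_hours_near_peak(loads):.2%}")
```

Even in this made-up year, only 15 of 8,760 hours come within 5% of the peak, yet the whole system has to be sized for them.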

Imagine that you, an ice-cream-magnate-turned-grid-operator, have been bopping along for years (more than a decade, actually) without getting close to 50 gigawatts (GW) of coincident load. Then a heat wave in early September pushes you above 51 GW and threatens rolling blackouts. Suddenly you’re sending out an emergency alert asking people to pretty please turn off their A/C.

To complicate matters further, load is now growing for the first time in 20 years, putting more strain on the system during peak hours. Datacenters (which mostly run 24/7) and EVs (which might charge during peak times) are some of the biggest worries for grid planners.

These worries are not idle: a datacenter plopped down in a suburb might request the same 200-megawatt (MW) interconnection as a steel mill’s electric arc furnace; a charger for a semi-truck can draw the same 1 MW as a small factory, but ramp up instantaneously⁴.

Couple this with aging equipment, and maybe it is not super surprising that electricity rates are rising?

–

But wait, there’s more!

The balancing of generation and load only really covers the ‘factory’ portion of our example. What about the ice cream trucks?

For electricity, these are the transmission and distribution lines that carry power to homes and businesses, alongside the transformers, switches, fuses, and circuit breakers that link them all together.

This equipment is also capacity limited, but by heat rather than real-time balancing.

Overloading an ice cream truck will make it break down; pushing too many electrons through a wire will make it melt.

To protect against this, individual pieces of equipment are rated⁵ to carry only so much current (roughly, so many electrons per second). To stay safely within these ratings during peaks, the distribution and transmission system might be planned⁶ against a ‘1 in 10 heat storm’ (to reflect high loading and high ambient temperatures), or an N-2 emergency situation (where 2 key elements, such as lines or transformers, are out at once).
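As a toy illustration of an N-1 / N-2 check, here is a sketch that asks whether a hypothetical substation can still carry its planned peak with any one, or any two, of its transformers out of service. Ratings are in MVA, which at a fixed voltage is just a current rating in different units. It assumes the transformers are interchangeable parallel paths that share load perfectly, which real equipment does not; actual planning uses load flow studies and per-equipment ratings. The function name and all numbers are made up.

```python
from itertools import combinations

def survives_contingencies(ratings_mva, peak_load_mva, n_out):
    """Toy N-k check: can the remaining units carry the peak with any `n_out` out?

    Assumes the units are interchangeable parallel paths that share load
    perfectly (a big simplification). Returns (True, None) if every outage
    combination is survivable, else (False, first_failing_outage).
    """
    for outage in combinations(range(len(ratings_mva)), n_out):
        remaining = sum(r for i, r in enumerate(ratings_mva) if i not in outage)
        if remaining < peak_load_mva:
            return False, outage
    return True, None

# Hypothetical substation: four transformers (ratings in MVA) and a
# 1-in-10 peak of 150 MVA.
transformers = [60, 60, 50, 50]
print(survives_contingencies(transformers, 150, n_out=1))  # (True, None)
print(survives_contingencies(transformers, 150, n_out=2))  # (False, (0, 1))
```

With 220 MVA installed, this substation rides through any single outage but not every double outage, so planning it to N-2 would mean adding capacity that sits idle almost all the time.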

Extreme heat from climate change is expected to further limit thermal capacity during peak moments, requiring additional buffers.

The result is further under-utilized capacity in transmission and distribution equipment, while expensive upgrades are undertaken to manage through temporary spikes.

—

This all sounds pretty bad. We have a system focused on managing through its worst moments, at the cost of unused capacity the rest of the time, and it is now seeing demand growth for the first time in a generation.

But this is also great news; there is lots of capacity in the existing system!

There is disproportionate value in things that bring down the peak (or at least avoid making it worse), allowing us to better use what we already have. And, increasingly, we have the tools and technologies to do this.

More and more homes have smart devices–thermostats, water heaters, stoves–that can shift their load to help avoid daily and seasonal peaks. Home batteries and EVs can increasingly feed power back into the system at key times. At commercial and industrial scales, microgrids with onsite generation and batteries can shift huge amounts of load temporarily.

These distributed energy resources (DERs) are the equivalent of installing ice cream fridges in homes and businesses: by moving capacity and storage to the edges of the system, the whole thing can be used more efficiently.
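Here is a minimal sketch of how a battery can shave a peak: a greedy rule that discharges whenever load exceeds a target limit, until the battery runs out. It assumes hourly time steps (so kW for one hour equals kWh), a battery that starts full, no losses, and no recharging inside the window. The function name and numbers are hypothetical, and real DER orchestration forecasts and optimizes rather than reacting greedily like this.

```python
def shave_peak(load_kw, limit_kw, battery_kwh, max_power_kw):
    """Greedy peak shaving: discharge whenever load exceeds `limit_kw`.

    Assumes hourly steps, a battery that starts full, no losses, and no
    recharging. Returns the net load the grid sees each hour.
    """
    stored = battery_kwh
    net = []
    for load in load_kw:
        excess = max(0.0, load - limit_kw)
        # Limited by the excess, the inverter's power rating, and remaining energy.
        discharge = min(excess, max_power_kw, stored)
        stored -= discharge
        net.append(load - discharge)
    return net

evening_load = [1.5, 2.0, 6.0, 7.5, 5.0, 2.5]  # hypothetical home, hourly kW
print(shave_peak(evening_load, limit_kw=4.0, battery_kwh=10.0, max_power_kw=5.0))
# -> [1.5, 2.0, 4.0, 4.0, 4.0, 2.5]
print(shave_peak(evening_load, limit_kw=4.0, battery_kwh=4.0, max_power_kw=5.0))
# -> [1.5, 2.0, 4.0, 5.5, 5.0, 2.5]
```

The second call shows the catch: a battery that is too small runs dry before the peak passes, which is part of why planners are wary of counting on DERs before they have seen them perform.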

To be sure, these are no panacea. Planning around these new technologies, many of which are only now being deployed at scale, is especially uncertain. Without knowing how they will perform in practice, it is risky to rely on them to defer more traditional investments.

Further, because of the complexity involved, new software stacks are needed to orchestrate these resources at scale. Nor are technology solutions sufficient on their own: regulatory and market structures will also need to evolve.

But cost pressures and customer expectations will push utilities and grid operators to make better use of existing capacity, if only to appease customers who want to charge their new EV or train the next ChatGPT. Done right, we might even be able to bring rates per kWh down (or at least slow their rise).

We should embrace the peak and the buildout it has forced. We can use the spare capacity to enable our electrified future.

In my next post, I’ll talk a bit more about what this means from a business model perspective, particularly for all the new companies trying to carve out pieces of this opportunity.

Thermal capacity (a bit more detail for those who care)

Electrical power (generally measured in watts) is a function of voltage (measured in volts) and current (measured in amperes, or amps).

Power = Voltage x Current
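A quick worked example of the formula, rearranged to solve for current: delivering the same 1 MW takes very different currents at the voltages below. This treats each circuit as single-phase with unity power factor, a simplification (most real transmission and distribution is three-phase, which carries less current per conductor for the same power); the helper name is hypothetical.

```python
def current_amps(power_watts, voltage_volts):
    """Current needed to deliver `power_watts` at `voltage_volts` (I = P / V).

    Simplification: single-phase, unity power factor.
    """
    return power_watts / voltage_volts

ONE_MEGAWATT = 1_000_000
for volts in (500_000, 12_000, 120):
    amps = current_amps(ONE_MEGAWATT, volts)
    print(f"{volts:>7,} V needs {amps:>9,.1f} A to deliver 1 MW")
```

The same megawatt needs about 2 A at 500 kV, about 83 A at 12 kV, and over 8,000 A at 120 V.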

Different parts of the grid operate at different, but specific, voltages. That might mean 500,000 volts for a long-distance transmission line, 12,000 volts for a local distribution circuit through a neighborhood, or the 120 volts at your wall outlet (if you’re in North America).

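Because these target voltages are fixed by the system design, the only way to move more power is to increase the current. This is a challenge, because every conductor has resistance, the ‘friction’ of electron flow, and pushing more current through that resistance produces more heat. The heat generated grows with the square of the current (Heat = Current² x Resistance), so doubling the current quadruples the heating.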

Electrical resistance (measured in ohms) relates the voltage drop along a conductor to the current flowing through it (Ohm’s law).

Resistance = Voltage / Current

Incidentally, this is why long-distance transmission lines run at high voltages: for a given amount of power, a higher voltage means less current, and because heating scales with the square of the current, the line loss drops sharply.
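To see why voltage matters so much, here is a sketch that computes resistive loss (Current² x Resistance) for the same 100 MW sent down the same hypothetical line at three voltages. The 10-ohm line resistance and the single-phase, unity-power-factor model are assumptions for illustration only.

```python
def line_loss_watts(power_watts, voltage_volts, resistance_ohms):
    """Resistive loss (Current² x Resistance) when sending `power_watts` at `voltage_volts`.

    Simplified single-phase, unity-power-factor model. Takes the current as P / V,
    which is reasonable when losses are a small fraction of the power sent.
    """
    current = power_watts / voltage_volts
    return current ** 2 * resistance_ohms

POWER_W = 100e6          # 100 MW to deliver
LINE_RESISTANCE = 10.0   # hypothetical total conductor resistance, in ohms
for kv in (115, 230, 500):
    loss = line_loss_watts(POWER_W, kv * 1_000, LINE_RESISTANCE)
    print(f"{kv} kV: {loss / 1e6:.2f} MW lost ({loss / POWER_W:.1%} of the power sent)")
```

Doubling the voltage halves the current and cuts the loss to a quarter: roughly 7.6 MW lost at 115 kV, 1.9 MW at 230 kV, and 0.4 MW at 500 kV in this toy setup.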

This heat is the key constraint on the individual components that make up the grid (wires, switches, and transformers), and therefore on moving energy around within the system.

For example, overhead lines sag between the power poles they hang on. Raise the current, and thus the heat, and the metal expands, so the line sags more. Add enough current and the line might sag into a tree (causing a ground fault, starting a fire, etc.). In theory, if the current goes high enough, the wire will fall apart under its own weight.
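Here is a rough sketch of why heat means sag, under loud assumptions: a level span, the standard parabolic approximation for conductor length (length ≈ span + 8 × sag² / (3 × span)), an assumed expansion coefficient for aluminum of 23 × 10⁻⁶ per °C, and no change in tension or elastic stretch (real sag-tension calculations account for these, which would make the effect somewhat smaller). The span, starting sag, and function names are made up.

```python
import math

ALUMINUM_EXPANSION_PER_C = 23e-6  # assumed linear thermal expansion coefficient

def conductor_length(span_m, sag_m):
    """Parabolic approximation of conductor length for a level span."""
    return span_m + 8 * sag_m ** 2 / (3 * span_m)

def sag_from_length(span_m, length_m):
    """Invert the parabolic approximation: the sag for a given conductor length."""
    return math.sqrt(3 * span_m * (length_m - span_m) / 8)

def sag_after_heating(span_m, sag_m, delta_temp_c, alpha=ALUMINUM_EXPANSION_PER_C):
    """Sag after the conductor warms by `delta_temp_c`, ignoring tension changes."""
    hotter_length = conductor_length(span_m, sag_m) * (1 + alpha * delta_temp_c)
    return sag_from_length(span_m, hotter_length)

# Hypothetical 300 m span that sags 6 m at its normal operating temperature.
for rise in (0, 25, 50):
    print(f"+{rise} C: sag ~ {sag_after_heating(300, 6, rise):.1f} m")
```

In this toy setup, a 50 °C rise lengthens the wire by only about 35 cm, yet the sag grows from 6 m to roughly 8.7 m.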

This looks a little different for each component, but none will fail as immediately as the overall system under a load mismatch.

1. There are a number of ingenious ways this balancing was accomplished in a time before computers, from synchronous condensers (essentially large synchronous motors spinning freely on the grid, acting like giant flywheels) to centrifugal governors at power plants that operated without human input. Coupled with the inertia from rotating generation, these could be enough to ride through minor fluctuations in load without bigger issues.

2. I am eliding a lot of detail here to keep things moving. The scenario I have in mind is a drop in frequency outside the operating limits of key generators, causing them to trip off as part of a cascading failure. In practice, generators are dispersed, so this often also involves thermal capacity limits on transmission equipment. In the Northeast blackout of 2003, for example, a generator tripped off, causing a line to sag and ground fault, which shifted load onto other lines that couldn’t handle it and resulted in a cascading failure; computer issues at the grid operator also contributed to the cascade.

3. Or a ‘rotating power outage’ if you’re an ISO (independent system operator) or regulator.

4. In a way, EVs are actually harder to deal with than datacenters, because it is not yet clear when or how often they will be charged, which makes them doubly hard to plan for without being overly conservative. This challenge drives the appeal behind EV managed charging startups like Weavegrid and Optiwatt, and planning / operations startups like Camus.

5. These limits can sometimes be flexed during emergency operations (most equipment has both a continuous rating and a higher, temporary emergency rating), but ultimately protection equipment like fuses and circuit breakers will open at sufficiently high currents to keep the underlying equipment from being damaged.

6. Incidentally, it is this planning process, and the load flow studies needed to determine which upgrades are required, that is the real bottleneck for new interconnections, not the overall balancing of generation and load.
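For a rough sense of what a load flow study checks, here is a sketch of a DC power flow, a common linearized approximation of full AC load flow, on a hypothetical three-bus network: a generator at bus 0 serving loads at buses 1 and 2 over three identical lines. It computes line flows in the base case and again with one line out, mimicking the “shifting loads to other lines” dynamic in the 2003 blackout described above. The network, reactances, 100 MVA base, and 120 MW ratings are all made up; real studies use AC models, far larger networks, many more contingencies, and dedicated software.

```python
def dc_power_flow(n_buses, lines, injections_pu, slack=0):
    """Toy DC power flow. Returns the per-unit flow on each line, in the order given.

    lines: list of (from_bus, to_bus, reactance_pu) tuples.
    injections_pu: net injection per bus (generation minus load). The slack bus
    entry is ignored, because the slack bus picks up whatever balances the system.
    Positive flow means power moving from `from_bus` to `to_bus`.
    """
    # Bus susceptance matrix: each line adds 1/x to its two diagonal entries
    # and subtracts 1/x from the two off-diagonal entries linking its buses.
    B = [[0.0] * n_buses for _ in range(n_buses)]
    for i, j, x in lines:
        b = 1.0 / x
        B[i][i] += b
        B[j][j] += b
        B[i][j] -= b
        B[j][i] -= b

    # Drop the slack bus (its angle is fixed at 0) and solve B_red * theta = P
    # with Gaussian elimination (partial pivoting) on the augmented matrix.
    keep = [k for k in range(n_buses) if k != slack]
    A = [[B[r][c] for c in keep] + [injections_pu[r]] for r in keep]
    n = len(keep)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= factor * A[col][c]
    solved = [0.0] * n
    for r in reversed(range(n)):
        rest = sum(A[r][c] * solved[c] for c in range(r + 1, n))
        solved[r] = (A[r][n] - rest) / A[r][r]

    theta = [0.0] * n_buses
    for k, angle in zip(keep, solved):
        theta[k] = angle
    # DC approximation: flow = angle difference / reactance.
    return [(theta[i] - theta[j]) / x for i, j, x in lines]


BASE_MVA = 100   # per-unit base, so 1.0 pu = 100 MW
RATING_MW = 120  # hypothetical thermal rating, same for every line
LINES = [(0, 1, 0.1), (0, 2, 0.1), (1, 2, 0.1)]  # bus 0 hosts the generator
INJECTIONS = [0.0, -1.0, -0.5]  # 100 MW load at bus 1, 50 MW at bus 2

def report(label, lines):
    flows = dc_power_flow(3, lines, INJECTIONS)
    for (i, j, _), flow in zip(lines, flows):
        mw = flow * BASE_MVA
        status = "OVERLOAD" if abs(mw) > RATING_MW else "ok"
        print(f"{label}: line {i}-{j} {mw:7.1f} MW {status}")

report("base case", LINES)
report("line 0-1 out", [ln for ln in LINES if ln[:2] != (0, 1)])
```

In the base case every line is within its rating; with line 0-1 out, all 150 MW has to route through line 0-2, which overloads it. Negative flows just mean power moving opposite to the listed direction.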